In this Climate Tech Special of the AI and ML From the Trenches series (see the earlier part 1 and part 2, and the GenAI special), we’ll look at how teams at Equilibrium Energy and Perennial are using Metaflow to build systems that pioneer techniques in sustainable power generation and in satellite imaging for soil-based carbon removal, respectively.

Besides these two companies, Metaflow is used by a number of other climate tech companies, such as Natcap, a nature intelligence company spun out of Oxford University that builds products to help corporations manage nature risk, and Dendra Systems, which uses smart tree-planting drones to fight deforestation. We hope to hear from them in future episodes!

Building a Modern Power Company Using Data Science and Metaflow: Equilibrium Energy

Adam Merberg, a machine learning engineer at Equilibrium Energy, recently spoke at our Metaflow OSS office hours about how the team leverages Metaflow for operational efficiency and to make its data scientists more productive. Equilibrium is on a climate-driven mission to electrify everything and uses ML/AI for much of its work, including:

- Grid-scale battery operation,

- “Virtuals” trading, and

- Participation in energy and power markets.

Life before Metaflow

When Adam started at Equilibrium, the organization was orchestrating production ML pipelines using Kubeflow on Argo Workflows. Machine learning experimentation used a disparate collection of ad hoc solutions, including scripts on EC2 instances and Ploomber. In Adam’s words, the team “used mostly different code for experimentation and production”, leading to painful reimplementations and reconciliations between production and experimentation code.

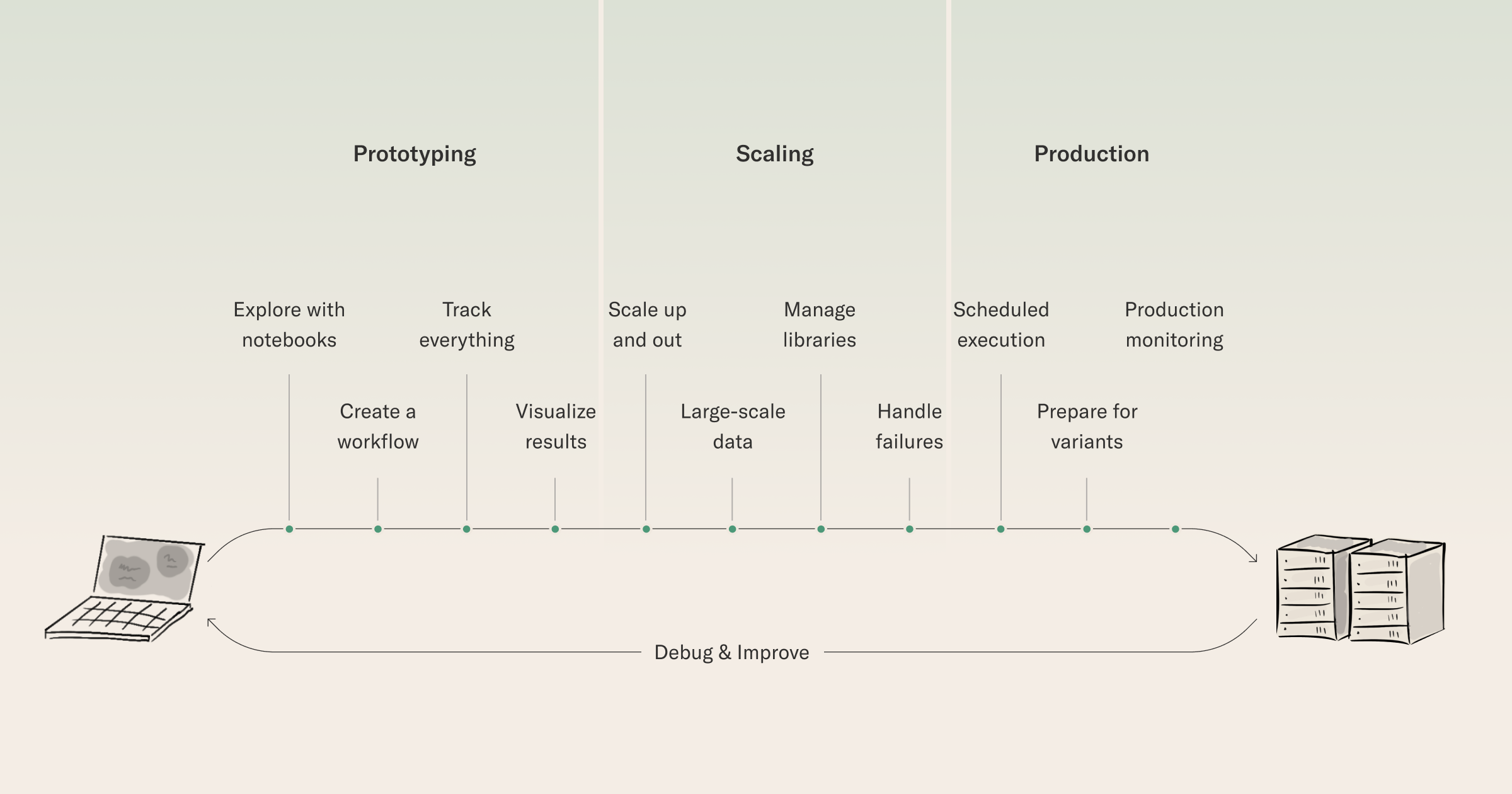

This matches how we view things at Metaflow and Outerbounds: we want as few changes as possible between your experimentation and production code, in order to close the loop between prototyping and production.

Evaluating New Tools

In late 2022, Equilibrium evaluated several orchestration tools, including MLflow, Ray, Metaflow, and Kedro. In particular, they were looking for an orchestrator that would facilitate:

- Running the same code locally and in the cloud at scale,

- Running DAG-based pipelines,

- Parametrizing pipelines, and

- Tracking and visualizing ML pipelines.

After evaluating many tools, they eventually chose Metaflow. But why?

Why Metaflow

The team at Equilibrium chose Metaflow because it checked all of their boxes: you can run the same code locally and at scale in the cloud (side note: Metaflow works with Apple Silicon, which most of their team uses locally), run DAG-based pipelines, parametrize pipelines, and track and visualize entire workflows. Extra functionality also won them over:

- The support for Argo Workflows, which they already used;

- In-built project management functionality, such as

- The resume functionality, which dramatically simplifies debugging, as you can restart failed runs with any changes you have made.

Forecasting for Battery Operations

Their first mature Metaflow use case was forecasting for battery operations. They were excited to be able to use the same modeling code for both experimentation and production, and to use Argo Workflows for production orchestration (with a time-based @schedule).

They used various Metaflow extensions for:

- Error handling,

- Environment setup, and

- Visualizing artifacts (using Metaflow cards!).

They were also able to bring humans into the loop in clever ways, crediting the Metaflow CLI utility, along with Metaflow’s Argo Events integration, for triggering a comparison of newly trained models against the baseline of the currently deployed model.

Experimentation at Equilibrium

To experiment and iterate rapidly on their models, the team uses Metaflow to build workflows that run locally, on Kubernetes, and/or on Argo Workflows, using essentially the same code. They also reported how easily they could scale experimental and production workflows, using Metaflow’s foreach construct for parallelization. On top of that, they enjoyed using custom decorators to include local changes in cloud workflows.

What’s Up Next at Equilibrium

After these initial proof points, the team at Equilibrium is excited to support many more use cases with Metaflow. They’ll also:

- Continue to adopt Argo Events (to increase the modularity of their pipelines, for example), and

- Use GitOps for both modeling and pipeline deployment with Argo CD.

There’s now also an organizational push for broader Metaflow adoption. First up, they’ll be replacing manually provisioned EC2 instances with Metaflow for virtuals trading. They’re also performing optimization with Julia and getting started with using Metaflow for more power systems science.

Perennial Earth: Leading the Charge in Soil-Based Carbon Removal Verification

In a recent presentation at Metaflow Office Hours, Bennett Hiles, a software engineer at Perennial Earth, shared insights into the development of their soil-based carbon removal verification platform. With over a year of experience at Perennial Earth and previous roles at companies like Coinbase and Button Inc., Bennett discussed how the platform is changing carbon verification in agriculture.

Perennial Earth: Pioneering Carbon Credits for Soil

Perennial Earth's goal is to secure carbon credits for soil, using satellite imagery, machine learning (ML) algorithms, and raw soil samples. By combining these technologies, Perennial Earth aims to make carbon verification accessible and cost-effective for farmers and landowners, contributing to sustainable land management practices.

From Metaflow to AWS Step Functions: Orchestrating Scalability

Bennett's team uses Metaflow for orchestration, together with AWS Step Functions paired with AWS Batch for scalability. This setup lets them manage their workload efficiently on AWS's cloud infrastructure and handle larger workloads during periods of increased demand. Bennett stated explicitly that, while Argo is a great modern tool for many use cases, Step Functions is a perfect fit for Perennial, a small team that wants to be able to scale its work quickly:

- No idle costs: they just pay for what they run;

- Step Functions is battle-tested, with amazing uptime; and

- Metaflow gave them an easy interface for developing with Step Functions.

Addressing Limitations: Scaling Beyond Default Constraints

Despite the advantages of AWS Step Functions, Bennett highlighted limitations such as the default maximum concurrency of 40. To overcome this, he proposed using distributed mode, which supports up to 10,000 iterations and lets the team scale to diverse and demanding workloads. Since then, the Metaflow team has merged improvements contributed by our friends at Autodesk, and Metaflow on Step Functions can now support a very large foreach (100k+)!
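To get a feel for why the concurrency ceiling matters, consider a back-of-the-envelope calculation. It assumes, simplistically, that all foreach tasks take about the same time and run in full batches ("waves"). The 100,000-task workload is a hypothetical figure, while the limits of 40 and 10,000 are the ones mentioned above.

```python
def waves_needed(num_tasks: int, max_concurrency: int) -> int:
    """Sequential batches needed when at most `max_concurrency` tasks run at once."""
    if num_tasks < 0 or max_concurrency <= 0:
        raise ValueError("num_tasks must be >= 0 and max_concurrency must be > 0")
    # Integer ceiling division: round up so a partial last batch still counts.
    return (num_tasks + max_concurrency - 1) // max_concurrency


NUM_TASKS = 100_000  # hypothetical foreach size

for limit in (40, 10_000):
    print(f"concurrency {limit}: {waves_needed(NUM_TASKS, limit)} waves")
# prints:
# concurrency 40: 2500 waves
# concurrency 10000: 10 waves
```

Under these assumptions, raising the ceiling from 40 to 10,000 cuts the number of sequential waves from 2,500 to 10, which is why the choice of mode matters for large fan-outs.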

Looking Ahead: Innovating for Sustainable Impact

Bennett emphasized the importance of continuous innovation in driving sustainable impact. Perennial Earth remains committed to pioneering solutions that address environmental challenges effectively. By embracing emerging technologies and staying ahead of industry trends, the team aims to lead the way in carbon verification and soil-based carbon removal, contributing to a more sustainable future for agriculture and the environment.

Want to share your ML platform story?

We host casual, biweekly Metaflow office hours on Tuesdays at 9am PT. Join us to share your story, hear from other practitioners, and learn with the community.

Also be sure to join the Metaflow community Slack where many thousands of ML/AI developers discuss topics like the above daily!