Thanks to Outerbounds’ collaboration with NVIDIA, Outerbounds users can now start building AI-powered applications using NVIDIA NIM inference microservices. NIM microservices offer top-performing production AI containers that package popular generative AI models, such as Llama 3 and Stable Diffusion, for seamless deployment in any environment.

Outerbounds is glad to be one of the first companies to integrate NIM microservices natively into its platform. With NIM on Outerbounds, developers can create end-to-end applications powered by LLMs and GenAI on a robust, developer-centric platform that is deployed securely across cloud accounts and on-premises clusters, with transparent all-you-can-eat pricing.

LLMs as an application building block

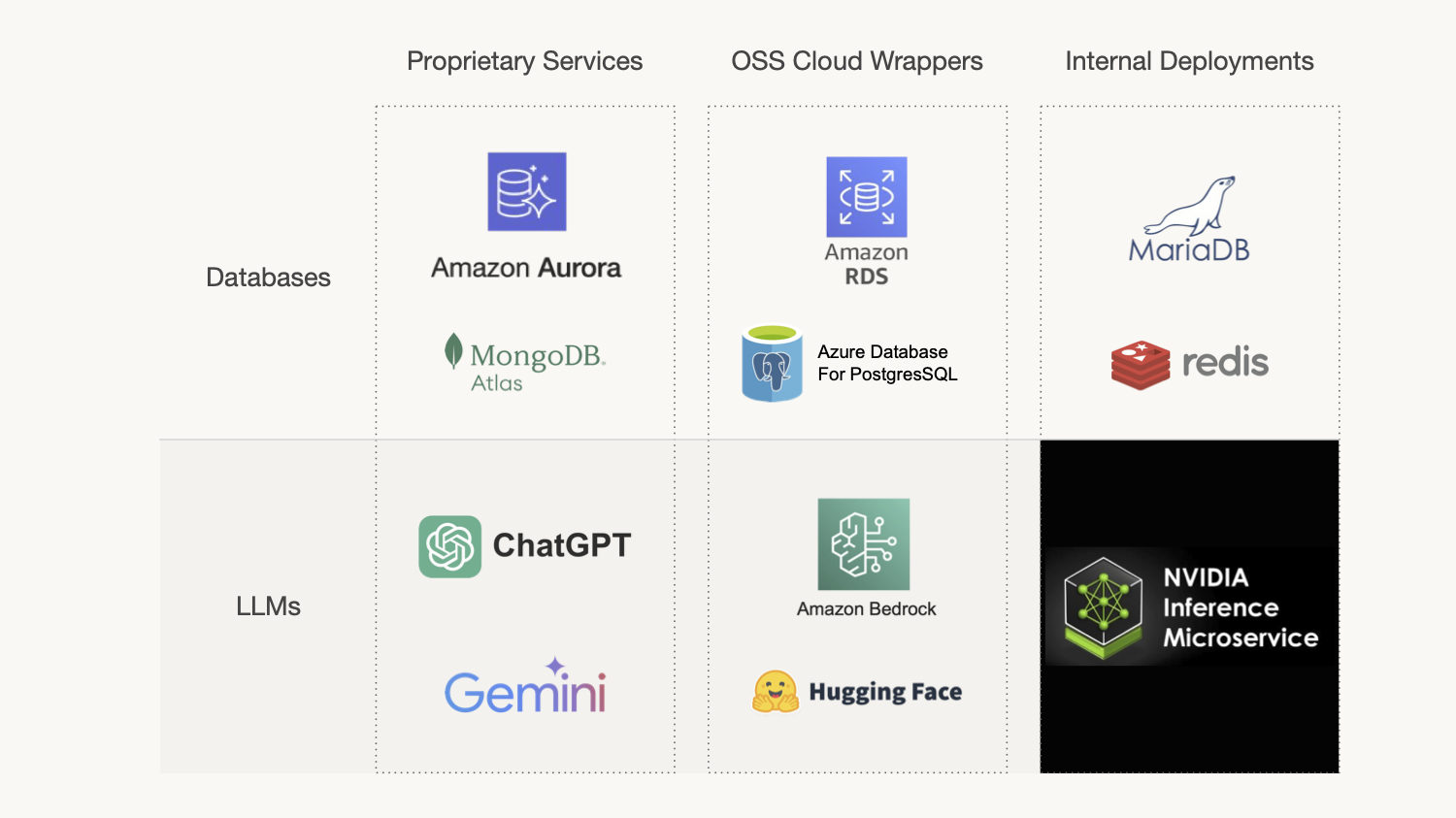

Imagine a world where LLMs become ubiquitous building blocks for systems, similar to databases today.

Analogous to proprietary database services like MongoDB Atlas today, we will see specialized LLM vendors, such as OpenAI or Anthropic, continuing to provide proprietary models as a service. Correspondingly, services like Amazon Bedrock will provide open-weight LLMs as cloud services, similar to what Amazon RDS has done with open-source databases.

While proprietary databases and databases-as-a-service have numerous well-justified use cases, most databases today are hosted and operated internally at companies’ (cloud) premises. Why is this the case?

- Cost - metered services can be cost-effective for certain workloads, but they can be prohibitively expensive in other cases.

- Performance - while modern database services scale well, they are many layers removed from the actual workloads and applications, which can result in lower throughput and higher latency.

- Operational concerns - external services are black boxes controlled by third parties - there is limited visibility into the behavior of the system, and changes can happen abruptly.

- Speed of innovation - external vendors may not be able to adopt the latest technologies as fast as internal deployments.

- Privacy - in many scenarios, sending data outside the company’s premises is a non-starter.

These concerns apply to LLMs as well. For most companies, developing and deploying an LLM stack from scratch or from open-source building blocks is impractical due to the effort and deep expertise required. Similar to databases, a preferable solution might be to use a pre-packaged, pre-configured, and optimized container by a trusted vendor, which can be freely deployed in the company’s (cloud) premises and used without limitations.

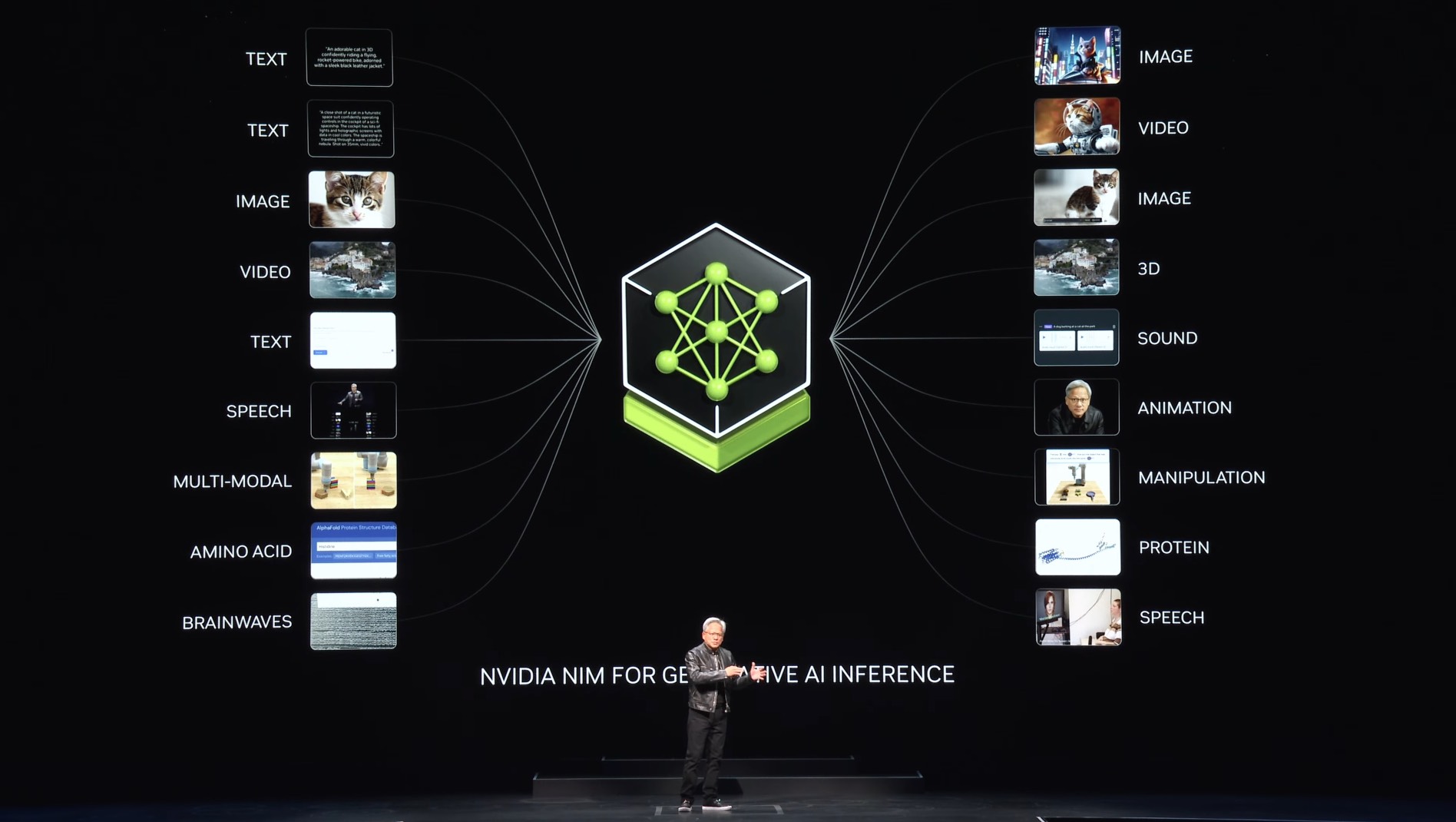

Enter NVIDIA NIM microservices

NVIDIA NIM microservices provide such a solution for generative AI. Similar to a highly optimized database engine provided by a vendor with deep technical expertise, NIM containers provide state-of-the-art generative AI models in a high-performing, optimized container.

Compared to other LLM endpoints, NIM provides the same benefits as internally deployed, vendor-packaged databases:

- unmetered cost,

- flexible deployments that match your workloads,

- deployment in the privacy of your own (cloud) premises,

- more control over SLAs and change management, with better operational visibility, and

- optimization and support by a trusted vendor.

Crucially, from the user’s point of view, they work exactly the same as other LLM services. NIM containers provide OpenAI-compatible APIs, making it easy for developers to build real-world AI applications. For inspiration, take a look at these NIM examples from the healthcare sector.
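To make the OpenAI compatibility concrete, here is a minimal sketch of calling a chat completions endpoint using only the Python standard library. The request and response shapes follow the OpenAI chat completions convention; the base URL and model name you pass in are assumptions that depend on your own deployment, and the helper names `build_chat_request` and `chat` are purely illustrative.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,  # model identifier served by your deployment (assumption)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(base_url: str, model: str, prompt: str) -> str:
    """Send the prompt and return the text of the first completion."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # OpenAI-style responses carry the generated text under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Assuming an endpoint is reachable at a hypothetical address such as `http://localhost:8000`, a call would look like `chat("http://localhost:8000", "<your-model-name>", "Summarize this document.")`. Because the API shape is the same, code written against other OpenAI-compatible services should need little more than a different base URL.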

NVIDIA NIM on Outerbounds

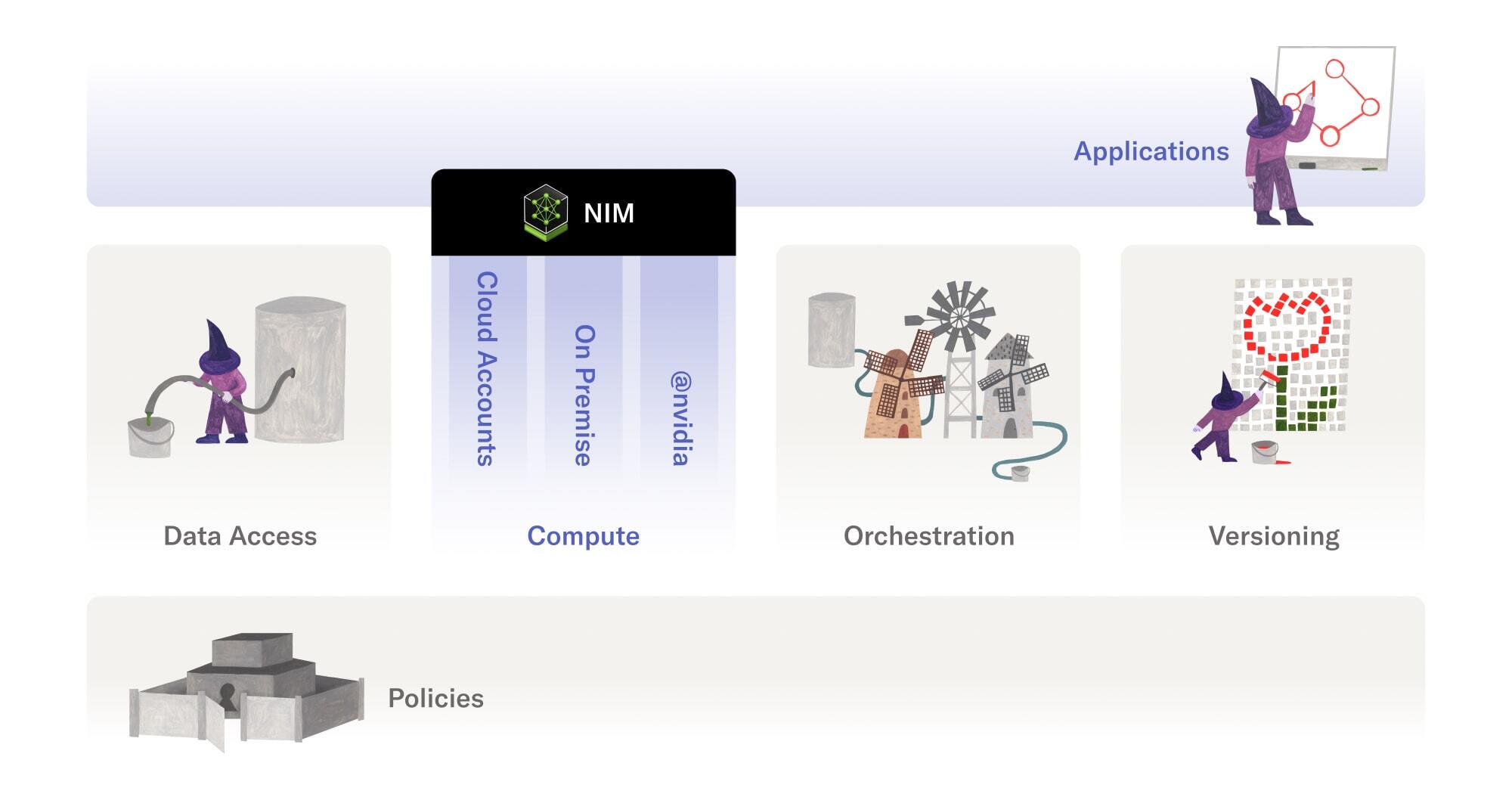

We believe that LLMs will be ubiquitous building blocks in applications, working in concert with all the other parts of the ML/AI infrastructure stack. Hence, building on a long-term collaboration with NVIDIA, we are excited to offer NIM microservices natively in the platform.

While there are many ways to experiment with LLMs today, Outerbounds shines at production use cases that access large amounts of data from data warehouses and pack custom business logic, ML models, and LLMs into automated, continuously running workflows. Such use cases require that all parts of the system work together seamlessly and securely.

We believe that data-intensive systems of the future will be built on a foundation like this, one that includes secure data access and flexible compute layers, with an option to include LLMs and traditional ML wherever necessary, all orchestrated in an easily observable and highly available manner, with a fanatical focus on the developer experience.

Previously, we have been working towards this goal by enabling you to

- access all-you-can-eat compute across clouds and on-prem resources,

- access GPUs directly from the @nvidiacloud,

- provide complete visibility and levers to minimize cost,

- observe systems end-to-end, and,

- pave the path from prototype to production.

Today, thanks to NIM, we are happy to add unlimited, state-of-the-art LLMs to the mix. Similar to compute in Outerbounds, you can access NIM microservices with all-you-can-eat pricing, in contrast to the per-token pricing typical of existing LLM APIs, so you can process hundreds of millions of documents with LLMs without breaking the bank.

Concretely, workloads that would cost thousands of dollars to process with, e.g., OpenAI APIs can now be handled at a fraction of the cost. You can also increase throughput through unlimited horizontal scaling, working around the rate limits of existing vendor APIs.
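The difference between the two pricing models can be sketched with some simple arithmetic. The functions below are illustrative only: every input (document counts, tokens per document, per-token price, flat cost) is a hypothetical parameter that you would replace with your own figures, and none of them reflect actual vendor or Outerbounds prices.

```python
def per_token_cost(num_documents: int, tokens_per_document: int,
                   price_per_million_tokens: float) -> float:
    """Total cost of a workload under metered, per-token pricing."""
    total_tokens = num_documents * tokens_per_document
    return total_tokens / 1_000_000 * price_per_million_tokens


def break_even_documents(flat_cost: float, tokens_per_document: int,
                         price_per_million_tokens: float) -> float:
    """Number of documents at which metered pricing costs as much as a flat fee."""
    cost_per_document = tokens_per_document / 1_000_000 * price_per_million_tokens
    return flat_cost / cost_per_document
```

With made-up inputs, `per_token_cost(1_000_000, 1_000, 2.0)` evaluates to `2000.0`: metered cost grows linearly with the number of documents, whereas a flat cost stays constant, so any workload larger than the break-even point is cheaper under unmetered pricing.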

Stay tuned for case studies and benchmarks!

Start evaluating today

You can start evaluating NVIDIA NIM containers easily in Outerbounds - no separate subscriptions or API keys are needed. They can be tuned and scaled based on your specific workloads and available hardware resources, and deployed on Azure, Google Cloud, AWS, on-prem, or the NVIDIA cloud integrated in Outerbounds.

To see all this in action, start your free 30-day trial today!