AWS Managed with Terraform

We support a Terraform module that automates the deployment of infrastructure to scale your Metaflow flows beyond your laptop.

The module contains configuration for:

- Amazon S3 - A dedicated private bucket to serve as a centralized storage backend.

- AWS Batch - A dedicated AWS Batch Compute Environment and Job Queue to extend Metaflow's compute capabilities to the cloud.

- Amazon CloudWatch - Configuration to store and manage AWS Batch job execution logs.

- AWS Step Functions - To allow scheduling Metaflow flows on AWS Step Functions.

- Amazon EventBridge - A dedicated role to allow time-based triggers for Metaflow flows configured on AWS Step Functions.

- Amazon DynamoDB - A dedicated Amazon DynamoDB table for tracking certain step executions on AWS Step Functions.

- AWS Fargate and Amazon Relational Database Service - A Metadata service running on AWS Fargate with a PostgreSQL database on Amazon Relational Database Service to log flow execution metadata, as well as an optional UI backend and front-end app.

- Amazon API Gateway - A dedicated TLS termination point and an optional point of basic API authentication via key to provide secure, encrypted access to the Metadata service.

- AWS Identity and Access Management - Dedicated roles that follow the principle of least privilege when accessing resources such as AWS Batch.

- AWS Lambda - An AWS Lambda function that automates database migrations for the Metadata service.

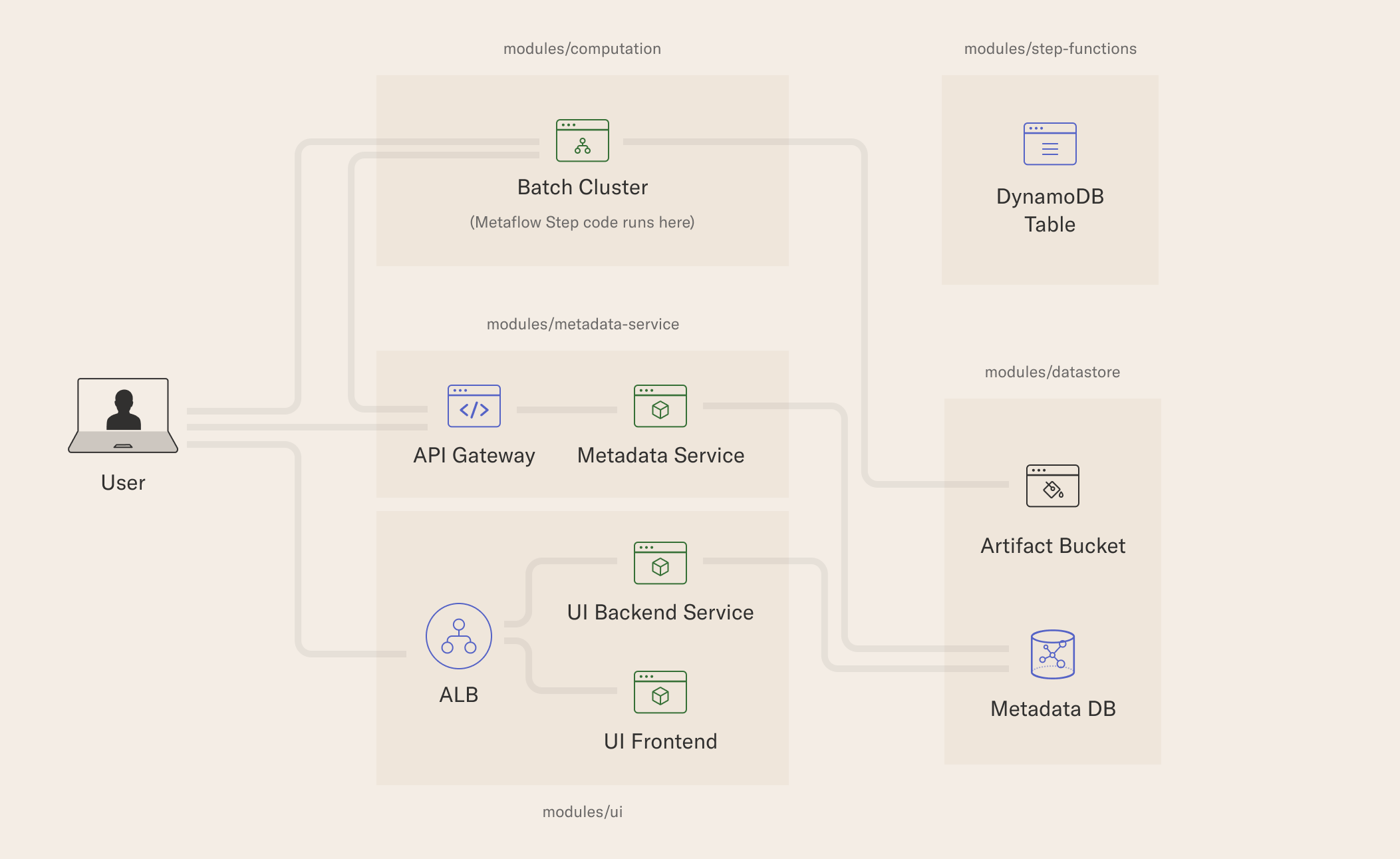

This Terraform module consists of several submodules that can be used separately as well.

You can find the details in the README in the repository.

Resources used in Terraform Modules that deploy Metaflow with AWS Batch
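
As a rough illustration of how the module might be wired into a root configuration, here is a minimal sketch. The registry address `outerbounds/metaflow/aws` and every input name (`resource_prefix`, `resource_suffix`, `enable_step_functions`, `subnet1_id`, `subnet2_id`, `vpc_id`, `vpc_cidr_blocks`, `with_public_ip`, `tags`) are assumptions that should be checked against the README before use. The region and the `variable` blocks are hypothetical placeholders for an existing VPC.

```hcl
# Minimal sketch, not a verified configuration.
# All input names below are assumptions; confirm them in the module README.

provider "aws" {
  region = "us-west-2" # hypothetical region
}

# Hypothetical variables describing an existing VPC to deploy into.
variable "vpc_id" {
  type = string
}

variable "vpc_cidr_blocks" {
  type = list(string)
}

variable "subnet_ids" {
  type        = list(string)
  description = "Two subnets, ideally in different availability zones"
}

module "metaflow" {
  # Assumed Terraform Registry address; pin a version in real use.
  source = "outerbounds/metaflow/aws"

  # Used to name the created resources (S3 bucket, Batch queue, and so on).
  resource_prefix = "metaflow"
  resource_suffix = "demo"

  # Networking for AWS Batch, Fargate, and RDS.
  vpc_id          = var.vpc_id
  vpc_cidr_blocks = var.vpc_cidr_blocks
  subnet1_id      = var.subnet_ids[0]
  subnet2_id      = var.subnet_ids[1]
  with_public_ip  = true

  # Assumed toggle for the Step Functions, EventBridge, and DynamoDB pieces.
  enable_step_functions = true

  tags = {
    managedBy = "terraform"
  }
}
```

With a configuration along these lines, the usual `terraform init`, `terraform plan`, and `terraform apply` sequence would create the resources listed above in the target account.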