GenAI, LLMs, and GPUs

Harness GPUs for deep learning, LLMs, and Generative AI cost-efficiently.

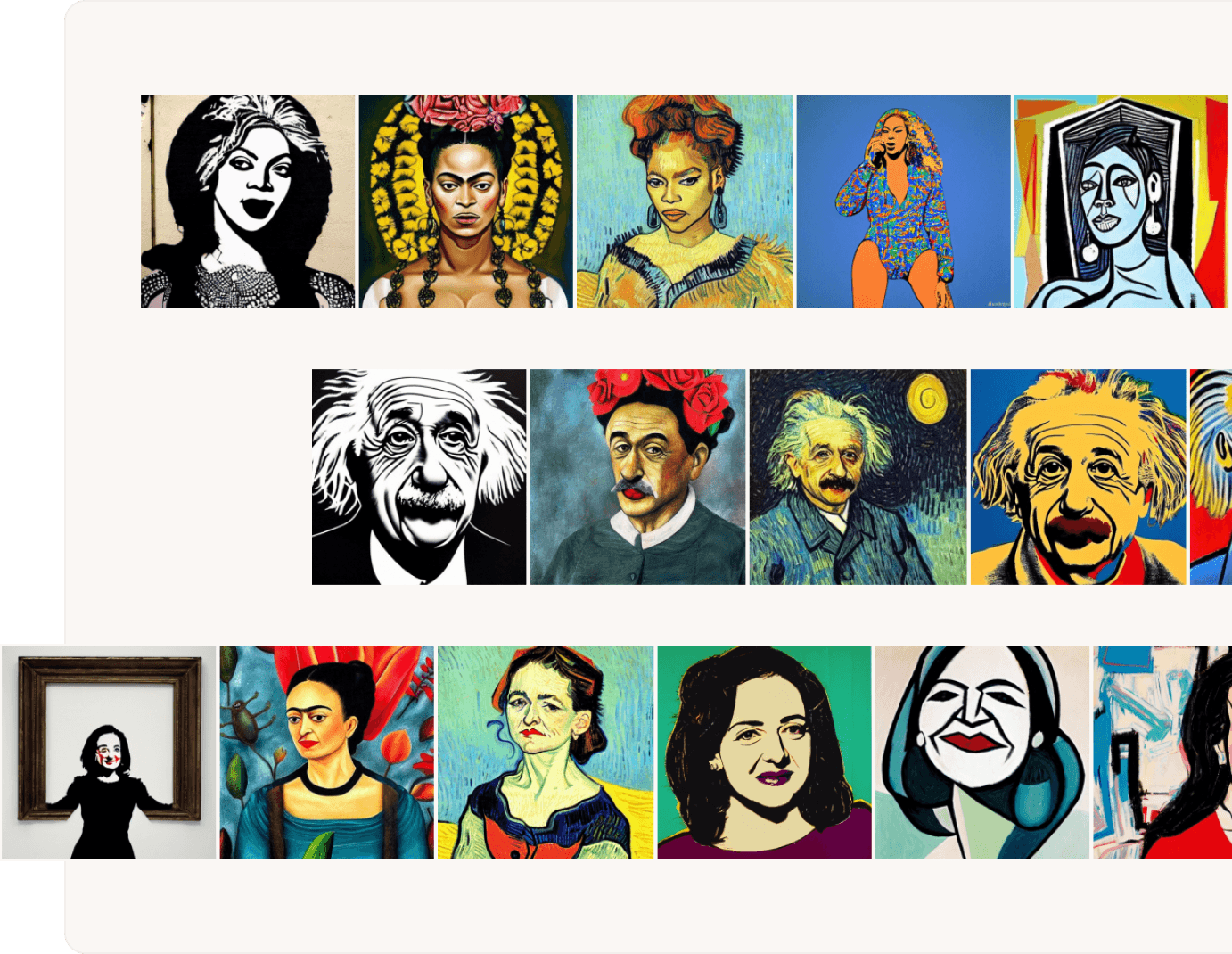

Develop innovative AI-powered apps

Experiment, innovate, and develop unique AI-powered apps. Take control of your models with custom AI workflows.

Use the latest open-source foundation models from Hugging Face. Fine-tune them with PyTorch and DeepSpeed using your own instructions and datasets.

Train and fine-tune on GPU clusters

Experiment on a GPU-powered cloud workstation, fine-tune foundation models and LLMs on an auto-scaling GPU cluster, or use your own GPU resources. Parallelize training across multiple GPUs and monitor utilization right in the UI.
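To make the idea of parallelizing over multiple GPUs concrete, here is a minimal plain-Python sketch of data-parallel sharding, where each GPU worker takes every Nth sample. The function name `shard_indices` and its parameters are hypothetical, chosen for illustration only. This is not the platform's API, and real training frameworks handle sharding for you.

```python
from typing import List


def shard_indices(num_samples: int, world_size: int, rank: int) -> List[int]:
    """Return the sample indices that worker `rank` handles in one epoch.

    Hypothetical helper: `world_size` is the number of GPU workers and
    `rank` is this worker's position in [0, world_size).
    """
    if world_size <= 0:
        raise ValueError("world_size must be positive")
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    # Strided split: worker 0 gets 0, N, 2N, ...; worker 1 gets 1, N+1, ...
    return list(range(rank, num_samples, world_size))


if __name__ == "__main__":
    # Show how 10 samples would be divided among 4 workers.
    for rank in range(4):
        print(rank, shard_indices(10, 4, rank))
```

Every sample lands on exactly one worker, so adding GPUs shrinks each worker's share of the data rather than duplicating work.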

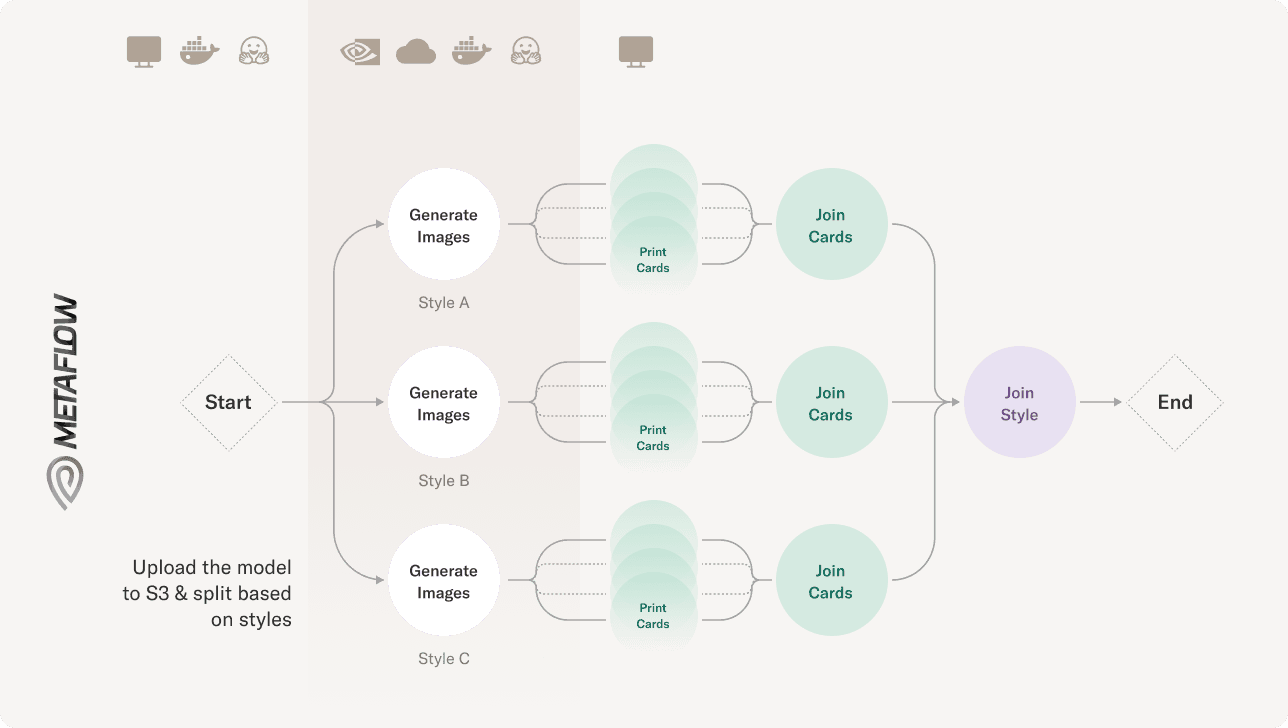

Operationalize AI

AI doesn’t build and run itself. You need the full stack of ML infrastructure to develop and deploy production-grade AI workflows.

Use Metaflow to develop workflows, feed them with data, run compute, and leverage versioning to track lineage from fine-tuned models to their foundations.
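As a rough illustration of what tracking lineage from a fine-tuned model back to its foundation can look like, here is a small plain-Python sketch. It deliberately does not use Metaflow's API. The `ModelVersion` class, the `lineage` function, and the model names are all hypothetical and stand in for whatever versioned artifacts your workflows produce.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical record of one versioned model."""
    name: str
    version: str
    parent: Optional[str] = None  # key of the model this one was fine-tuned from

    @property
    def key(self) -> str:
        return f"{self.name}:{self.version}"


def lineage(registry: Dict[str, ModelVersion], start: str) -> List[str]:
    """Walk parent links from `start` back to the foundation model."""
    chain: List[str] = []
    seen = set()
    current: Optional[str] = start
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle detected at {current}")
        seen.add(current)
        chain.append(current)
        current = registry[current].parent
    return chain


if __name__ == "__main__":
    # Made-up model names, for illustration only.
    base = ModelVersion("base-llm", "1")
    tuned = ModelVersion("support-bot", "1", parent=base.key)
    retuned = ModelVersion("support-bot", "2", parent=tuned.key)
    registry = {m.key: m for m in (base, tuned, retuned)}
    print(" <- ".join(lineage(registry, retuned.key)))
```

Storing the parent reference alongside each version is what makes it possible to answer "which foundation model and which intermediate runs produced this deployment?" after the fact.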