Structuring projects

Packaging code and its dependencies for reproducible execution can be a complex task. Fortunately, Metaflow and Outerbounds simplify this process. By following the guidance on this page, you can structure your projects so that they support rapid iteration during development, remain reproducible, and are ready for production.

Packaging software

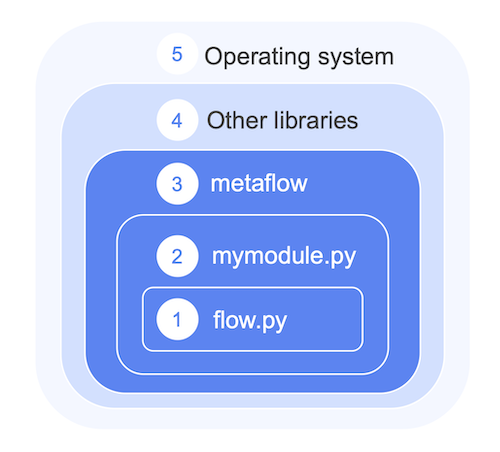

A typical Metaflow project consists of five layers of software:

1. A Metaflow flow defined in a Python file.
2. Custom Python modules and packages that contain the project-specific code.
3. The Metaflow library itself and any installed Metaflow extensions.
4. 3rd party libraries and frameworks used by the project.
5. The underlying operating system with hardware drivers installed, especially CUDA for GPUs.

Metaflow automatically packages layers 1-3 (the dark blue area), which, not coincidentally, are the parts that change most frequently during development. Depending on the needs of your projects and organization, you have a few different paths for managing layers 4 and 5, that is, external libraries and low-level concerns such as device drivers. These paths are covered in detail in Managing Dependencies.

Example: Visualizing fractals

To demonstrate a typical project, let's create a flow that visualizes a fractal using two off-the-shelf libraries, pyfracgen and matplotlib.

We encourage you to follow Python best practices when designing your project: use Python modules and packages to organize your code into logical components. For instance, it makes sense to create a dedicated module for all the logic related to fractal generation. Save this snippet in a file, myfractal.py:

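    def make_fractal():
        # Imported inside the function, so importing myfractal itself
        # does not require these libraries to be installed.
        import pyfracgen as pf
        from matplotlib import pyplot as plt

        string = "AAAAAABBBBBB"
        xbound = (2.5, 3.4)
        ybound = (3.4, 4.0)
        res = pf.lyapunov(
            string, xbound, ybound, width=4, height=3, dpi=300, ninit=2000, niter=2000
        )
        pf.images.markus_lyapunov_image(res, plt.cm.bone, plt.cm.bone_r, gammas=(8, 1))
        # Return the current matplotlib figure so the caller can display it.
        return plt.gcf()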

Why separate modules and packages?

Creating a separate module or a package composed of multiple modules offers several benefits:

- You can develop each module independently. For instance, you can test and develop make_fractal in a notebook simply by adding a cell:

      import myfractal
      myfractal.make_fractal()

- You can use standard Python testing tools, such as pytest, to unit test the module.

- You can share the module between multiple flows and other systems, encouraging reusability and consistent business logic across projects.
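As a rough illustration of the testing benefit, here is a minimal sketch of a pytest-style test for make_fractal. It rests on a few assumptions that are not part of the original project: the function body is repeated inline only to keep the sketch self-contained (in a real project you would import myfractal), and the two libraries are replaced with unittest.mock stand-ins so the test checks the wiring rather than the rendered image.

```python
import sys
from unittest import mock


def make_fractal():
    # Same logic as myfractal.make_fractal, repeated so this sketch is
    # self-contained; in a real project you would `import myfractal` instead.
    import pyfracgen as pf
    from matplotlib import pyplot as plt

    res = pf.lyapunov(
        "AAAAAABBBBBB", (2.5, 3.4), (3.4, 4.0),
        width=4, height=3, dpi=300, ninit=2000, niter=2000,
    )
    pf.images.markus_lyapunov_image(res, plt.cm.bone, plt.cm.bone_r, gammas=(8, 1))
    return plt.gcf()


def test_make_fractal_returns_current_figure():
    # Stand-ins for the real libraries (an assumption made for speed and
    # isolation; a fuller test would render with the real packages).
    fake_pf = mock.MagicMock(name="pyfracgen")
    fake_mpl = mock.MagicMock(name="matplotlib")
    fakes = {"pyfracgen": fake_pf, "matplotlib": fake_mpl}

    # Temporarily register the stand-ins so the imports inside
    # make_fractal resolve to them; patch.dict restores sys.modules after.
    with mock.patch.dict(sys.modules, fakes):
        fig = make_fractal()

    # The sequence string is passed to the Lyapunov generator exactly once...
    fake_pf.lyapunov.assert_called_once()
    assert fake_pf.lyapunov.call_args.args[0] == "AAAAAABBBBBB"
    # ...and the function returns whatever pyplot reports as the current figure.
    assert fig is fake_mpl.pyplot.gcf.return_value
```

Saved in a file such as test_myfractal.py (a hypothetical name), the test function would be picked up by pytest's default discovery rules.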

Using a custom module in a flow

Save this flow in fractalflow.py in the same directory as myfractal.py:

    from metaflow import FlowSpec, card, pypi, step, current
    from metaflow.cards import Image

    class FractalFlow(FlowSpec):
        @step
        def start(self):
            self.next(self.plot)

        @pypi(python="3.9.13", packages={"pyfracgen": "0.0.11", "matplotlib": "3.8.0"})
        @card(type="blank")
        @step
        def plot(self):
            import myfractal

            img = myfractal.make_fractal()
            current.card.append(Image.from_matplotlib(img))
            self.next(self.end)

        @step
        def end(self):
            pass

    if __name__ == "__main__":
        FractalFlow()

We import our custom module, myfractal, in the plot step. Naturally, the plot step can execute only if the myfractal.py file is accessible to it.

Notably, as long as both the flow file and its supporting modules and packages are in the same directory, or in subdirectories under the flow directory, they fall in the dark blue area in the diagram above, which Metaflow packages automatically. For details about this packaging logic, see Structuring Projects in the Metaflow documentation.

If you are curious, you can execute

    python fractalflow.py --environment=pypi package list

to see what files Metaflow includes in its code package automatically. Note that Metaflow includes the metaflow library itself and all extensions installed to guarantee consistent execution across environments.
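To build intuition for which of your own files end up in layers 1 and 2, the following sketch walks a flow directory and lists the Python files in it and its subdirectories. It is only an approximation under stated assumptions, not Metaflow's actual packaging code: the function name list_packaged_candidates is hypothetical, only the .py suffix is considered, and hidden directories are skipped. The package list command above remains the authoritative answer.

```python
import os


def list_packaged_candidates(flow_dir, suffixes=(".py",)):
    """Return sorted relative paths under flow_dir that end with one of suffixes."""
    found = []
    for root, dirs, files in os.walk(flow_dir):
        # Skip hidden directories such as .git (an assumption of this sketch).
        dirs[:] = [d for d in dirs if not d.startswith(".")]
        for name in files:
            if name.endswith(tuple(suffixes)):
                rel = os.path.relpath(os.path.join(root, name), flow_dir)
                # Normalize separators so the output looks the same on any OS.
                found.append(rel.replace(os.sep, "/"))
    return sorted(found)
```

Calling list_packaged_candidates(".") in the directory that holds fractalflow.py and myfractal.py should list both files, along with any modules in subdirectories.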

Including libraries and frameworks

The myfractal.py module won't work unless it can import the pyfracgen and matplotlib packages. While you could easily install them manually on your local workstation - just run pip install pyfracgen matplotlib - there are a few challenges with this approach:

- You can't execute the code in the cloud as the packages are only available on your local workstation.

- Your colleagues can't execute the flow without knowing what exact packages are needed. Or, you can't execute the code on a new laptop! The project is not reproducible.

- You can't deploy the code in production. Even if the packages were available, we should isolate production deployments from any abrupt changes in 3rd party libraries - maybe matplotlib will release a new version that will break the code.
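To see how a manually managed environment can drift from what the code expects, the sketch below compares the versions installed in the current Python environment against the versions pinned in the flow above. The helper find_version_mismatches is a hypothetical name introduced for illustration; it relies only on the standard library's importlib.metadata.

```python
from importlib import metadata


def find_version_mismatches(pins):
    """Map each package whose installed version differs from its pin
    to a (pinned, installed) tuple; installed is None if it is missing."""
    mismatches = {}
    for name, pinned in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches


# The versions pinned in the @pypi decorator of FractalFlow.
PINS = {"pyfracgen": "0.0.11", "matplotlib": "3.8.0"}

if __name__ == "__main__":
    for name, (pinned, installed) in find_version_mismatches(PINS).items():
        print(f"{name}: pinned {pinned}, installed {installed or 'not installed'}")
```

A check like this only detects drift on one machine; it does nothing to make the same versions available in the cloud or in production.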

Outerbounds provides a few different ways to address issues like these related to 3rd party dependencies, as outlined in Managing Dependencies. In this example, we use the @pypi decorator to include the libraries in a reproducible manner.

To run the flow, execute

    python fractalflow.py --environment=pypi run

This may take a few minutes the first time, as a virtual environment containing the libraries is created and cached. Take a look at Runs to see the resulting fractal!

If you are curious, you can execute the flow in the cloud too, without having to do anything extra:

    python fractalflow.py --environment=pypi run --with kubernetes

Notably, the @pypi decorator used above doesn't just pip install the packages on the fly in the cloud. It automatically creates and caches a stable, production-ready execution environment, which is isolated from any changes in the upstream libraries.

Summary

By following the patterns documented here, your projects will benefit from:

- Software engineering best practices through modular, reusable, testable code.

- Easy cloud execution - no need to build and manage Docker images manually.

- Stable production environments that are isolated from libraries changing over time.