Deploy to production

All the examples thus far have been run manually. A defining feature of production deployments is that they run automatically and reliably without human supervision. A core component of Outerbounds is a highly available workflow orchestrator that allows you to deploy any flow to production with one command.

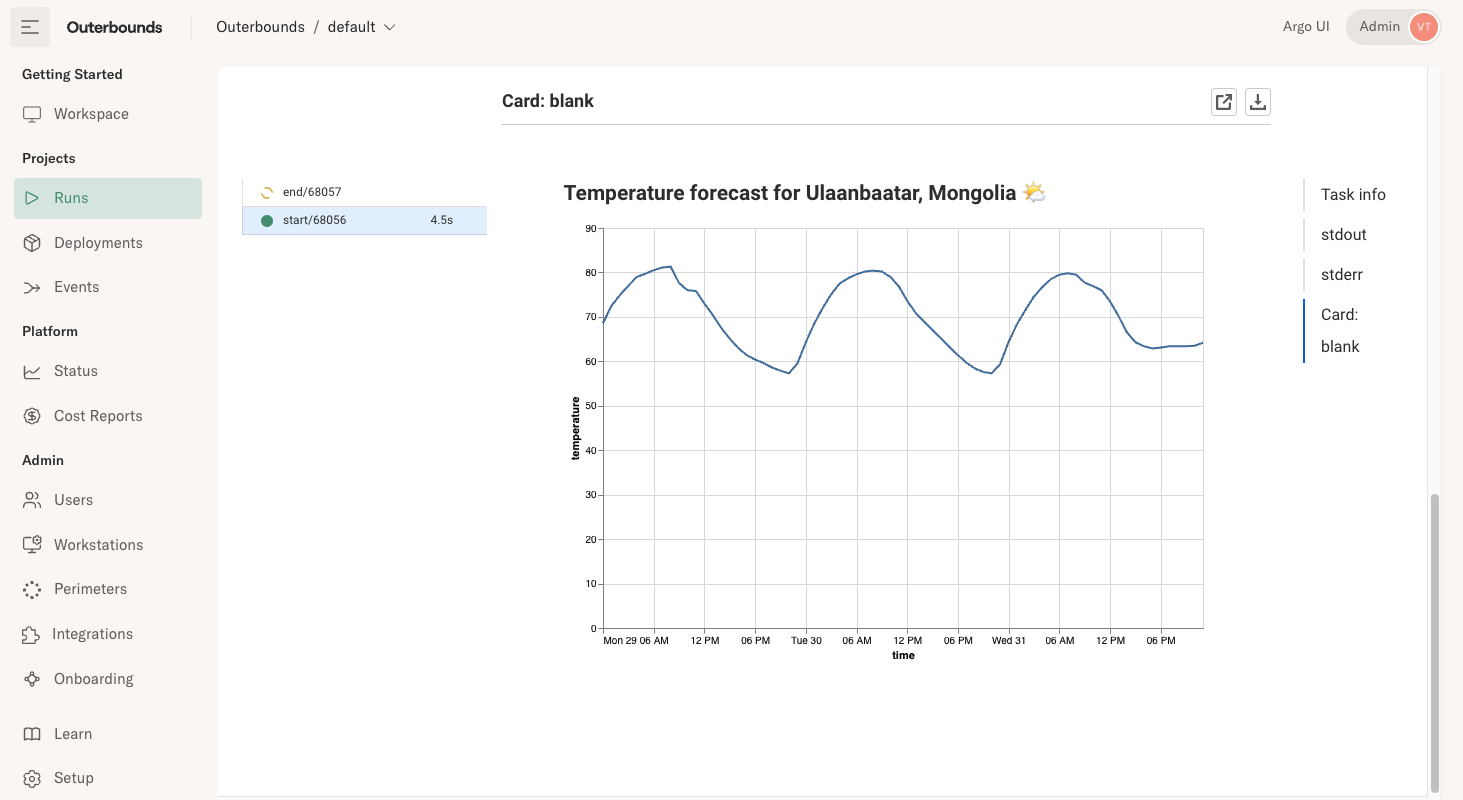

Example: Weather forecast 🌤️

Weather forecasts are a great example of an inference workflow that needs to run in production, without failures, at a regular cadence. Although you could train a weather forecasting model on Outerbounds too, for simplicity's sake we call an existing API to provide a forecast for a desired location.

Save this flow to weatherflow.py:

from metaflow import FlowSpec, step, Parameter, card, current, project, trigger, schedule, retry
from metaflow.cards import Markdown, VegaChart

GEOCODING = "https://geocoding-api.open-meteo.com/v1/search"
FORECAST = "https://api.open-meteo.com/v1/forecast"

CHART = {
    "$schema": "https://vega.github.io/schema/vega-lite/v5.json",
    "width": 600,
    "height": 400,
    "mark": {"type": "line", "tooltip": True},
    "encoding": {
        "x": {"field": "time", "type": "temporal"},
        "y": {"field": "temperature", "type": "quantitative"},
    },
}


# ⬇️ Enable this decorator if you want hourly forecasts
# @schedule(hourly=True)
@trigger(event="forecast_request")
@project(name="weather")
class WeatherFlow(FlowSpec):
    location = Parameter("location", default="San Francisco")
    unit = Parameter("unit", default="fahrenheit")

    def parse_location(self):
        import requests

        resp = requests.get(GEOCODING, {"name": self.location, "count": 1}).json()
        if "results" not in resp:
            raise Exception(f"Location {self.location} not found")
        else:
            match = resp["results"][0]
            self.latitude = match["latitude"]
            self.longitude = match["longitude"]
            self.loc_name = match["name"]
            self.country = match["country"]

    def get_forecast(self):
        import requests

        resp = requests.get(
            FORECAST,
            {
                "latitude": self.latitude,
                "longitude": self.longitude,
                "hourly": "temperature_2m",
                "forecast_days": 3,
                "temperature_unit": self.unit,
            },
        ).json()
        forecast = resp["hourly"]
        self.forecast = [
            {"time": t, "temperature": x}
            for t, x in zip(forecast["time"], forecast["temperature_2m"])
        ]

    @retry
    @card(type="blank")
    @step
    def start(self):
        self.parse_location()
        self.get_forecast()
        current.card.append(
            Markdown(f"# Temperature forecast for {self.loc_name}, {self.country} 🌤️")
        )
        CHART["data"] = {"values": self.forecast}
        current.card.append(VegaChart(CHART))
        self.next(self.end)

    @step
    def end(self):
        pass


if __name__ == "__main__":
    WeatherFlow()

The decorators in this flow provide its production-related features:

You can enable the @schedule decorator to run the flow automatically every hour, day, or at any other cadence.

The @trigger decorator enables the flow to be triggered by an external event, here forecast_request.

The @project decorator allows many developers to work together, enabling concurrent deployments that are safely isolated from each other.

The @retry decorator retries the step automatically upon failure, e.g. to deal with the forecast API being temporarily unresponsive.
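To make the retry behavior concrete, here is a minimal plain-Python sketch of the idea behind @retry. It is not Metaflow's implementation: the helper retry_call, its parameters, and the stand-in function flaky_forecast_api are hypothetical names introduced only for illustration.

```python
import time


def retry_call(func, times=3, delay_seconds=0.0):
    """Call func, retrying up to `times` more times if it raises.

    Hypothetical helper for illustration; not part of Metaflow.
    """
    last_error = None
    for attempt in range(times + 1):
        try:
            return func()
        except Exception as err:  # real code would likely catch narrower errors
            last_error = err
            if attempt < times:
                time.sleep(delay_seconds)
    raise last_error


# Hypothetical stand-in for an API that fails twice before responding.
calls = {"count": 0}


def flaky_forecast_api():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("forecast API temporarily unavailable")
    return {"temperature": 61.5}


result = retry_call(flaky_forecast_api, times=3)
print(result, "after", calls["count"], "attempts")
```

In the flow itself none of this is needed: adding @retry above the step is enough, and the orchestrator takes care of re-running the step.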

Developing flows safely in isolation

Before we deploy a flow to production, it is a good idea to test it first. Run:

python weatherflow.py run --location ulaanbaatar

Try it with your favorite place! The result is stored as an artifact, forecast, and presented as a card.
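The shape of the forecast artifact can be sketched offline. The snippet below applies the same transformation as get_forecast to a made-up sample that mimics the "hourly" part of the API response; the timestamps and temperatures are invented for illustration, and to_rows is a hypothetical helper name.

```python
# Made-up sample mimicking the "hourly" field that get_forecast reads.
sample_hourly = {
    "time": ["2024-01-01T00:00", "2024-01-01T01:00", "2024-01-01T02:00"],
    "temperature_2m": [51.2, 50.8, 50.1],
}


def to_rows(hourly):
    """Pair each timestamp with its temperature, as get_forecast does."""
    return [
        {"time": t, "temperature": x}
        for t, x in zip(hourly["time"], hourly["temperature_2m"])
    ]


forecast = to_rows(sample_hourly)

# Each row is a small dict, which is the format the chart consumes.
warmest = max(forecast, key=lambda row: row["temperature"])
print(len(forecast), "rows; warmest at", warmest["time"])
```

A list of flat records like this maps directly onto the "time" and "temperature" fields referenced in the CHART specification.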

Notably, a test run like this is isolated from production by default, because all artifacts live in their own namespaces. You can keep developing and running the flow in your development environment after we have deployed it to production, knowing that development won't interfere with production.

Deploy to production

Let's deploy the flow to production. Just execute:

python weatherflow.py argo-workflows create

The flow is now deployed to run automatically without any human intervention! You can turn off your laptop or workstation, and the flow will run without interruptions.

You can see all production deployments in the Deployments view.

Notice that, by default, the @project decorator automatically prefixes the deployment name with your username, e.g. weather.user.aliceexample.com.weatherflow, which allows multiple developers to create their own isolated deployments.

You can add the --production flag to promote your deployment to be the singular production version:

python weatherflow.py --production argo-workflows create

Or, you can use the --branch option to create branched deployments

e.g. for A/B testing.
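The naming scheme can be sketched as a small function. This is an illustration only, not the actual implementation: it assumes that user deployments use a user. prefix (as in the example name above), that --branch deployments use a test. prefix, and that production deployments use prod, and the function deployment_name is a hypothetical helper.

```python
def deployment_name(project, flow, user=None, branch=None, production=False):
    """Sketch of how a deployment name may be composed (assumed scheme).

    Assumptions: "user.<name>" for personal deployments, "test.<branch>"
    for --branch, and "prod" (or "prod.<branch>") for --production.
    """
    if production:
        branch_part = "prod" if branch is None else f"prod.{branch}"
    elif branch is not None:
        branch_part = f"test.{branch}"
    else:
        branch_part = f"user.{user}"
    return f"{project}.{branch_part}.{flow}".lower()


print(deployment_name("weather", "WeatherFlow", user="aliceexample.com"))
print(deployment_name("weather", "WeatherFlow", production=True))
print(deployment_name("weather", "WeatherFlow", branch="variant-b"))
```

Because the branch is part of the name, deployments created by different users, or with different --branch values, never overwrite each other.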

Triggering a production run

Outerbounds provides a number of different ways to trigger a production run. Let's get started with an easy one.

On the command line, execute:

python weatherflow.py argo-workflows trigger --location 'Las Vegas'

Note that you can pass any parameters to the trigger command. A key difference between run and trigger is that the latter starts a production run that is independent of your laptop or workstation. Even if you shut down your computer, the run will keep running.

You can see triggered runs in the Deployments view a few seconds before they show up in the Runs view, as the runs may take a while to get scheduled.

You can also trigger the flow by hand in the Deployments view by clicking Trigger next to a flow name.
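Since WeatherFlow is decorated with @trigger(event="forecast_request"), publishing that event is another way to start a run. The sketch below assumes that Metaflow's ArgoEvent class from metaflow.integrations is available and that an event backend is configured in your deployment; it also assumes that payload fields are mapped to flow parameters of the same name. The helpers build_payload and publish_forecast_request are hypothetical names.

```python
def build_payload(location, unit="fahrenheit"):
    """Build an event payload whose keys match the flow's Parameter names."""
    if unit not in ("fahrenheit", "celsius"):
        raise ValueError(f"Unsupported unit: {unit}")
    return {"location": location, "unit": unit}


def publish_forecast_request(location, unit="fahrenheit"):
    """Publish the event that WeatherFlow listens to.

    Assumes Metaflow is installed and an event backend is configured.
    """
    # Imported lazily so build_payload works without Metaflow installed.
    from metaflow.integrations import ArgoEvent

    ArgoEvent(name="forecast_request").publish(
        payload=build_payload(location, unit)
    )


payload = build_payload("Las Vegas")
print(payload)
```

Calling publish_forecast_request("Las Vegas") from another system, such as a web backend, would then request a forecast without anyone using the command line.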

Deploying with stable production environments

An important reason for taking care of dependency management, as we covered in defining the environment, is to ensure stable and reproducible production environments.

You can use the @conda/@pypi approach or custom images

to define production environments. For instance, you could deploy

our earlier example, TorchTestFlow, to production

as follows (the environment variable MYIMAGE is defined for readability):

export MYIMAGE=763104351884.dkr.ecr.us-east-1.amazonaws.com/pytorch-training:2.3.0-gpu-py311-cu121-ubuntu20.04-ec2

python torchtest.py --with kubernetes:image=$MYIMAGE argo-workflows create

Note that the --with option comes before the argo-workflows command.

GitOps & continuous delivery

In a more serious environment, users don't call argo-workflows create directly

on their workstations. Instead, the deployment happens through a CI/CD pipeline

such as GitHub Actions.

Outerbounds gives you tools to separate staging and production environments securely, test flows before deployment automatically, deploy A/B experiments, and set up end-to-end continuous delivery workflows. Read more here.
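As one possible sketch of the deployment step in a CI/CD pipeline, the script below chooses between the --production and --branch options shown earlier based on the Git branch being built. The function deploy_command and the convention that main is the production branch are assumptions for illustration, not an Outerbounds-provided tool.

```python
import shlex


def deploy_command(flow_file, git_branch, production_branch="main"):
    """Build the deployment command for a CI job (hypothetical helper).

    Assumption: the production branch deploys with --production, and
    every other branch gets its own isolated --branch deployment.
    """
    cmd = ["python", flow_file]
    if git_branch == production_branch:
        cmd.append("--production")
    else:
        cmd += ["--branch", git_branch]
    cmd += ["argo-workflows", "create"]
    return cmd


print(shlex.join(deploy_command("weatherflow.py", "main")))
print(shlex.join(deploy_command("weatherflow.py", "experiment-a")))
```

A CI job could pass the resulting list to subprocess.run(cmd, check=True) so that a failed deployment fails the pipeline.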

Also, occasionally things fail in production. Thanks to artifacts and consistent environments, you can reproduce and debug failures and deploy fixes back to production quickly.

You are ready for action!

This concludes the onboarding tour. 🎉 At this point, you should be able to:

Develop code in your existing environment or on an Outerbounds cloud workstation.

Scale to the cloud at any size, GPUs included, using your favorite libraries.

Deploy flows to run automatically in production.

While this covers the path from prototype to production, we have only scratched the surface of Outerbounds features. Explore the documentation to learn more, and don't hesitate to reach out to your support Slack with any questions!