Natural Language Processing - Episode 3

This episode references the Python script baselineflow.py.

In the previous episode, you saw how we constructed a model in preparation for Metaflow.

In this lesson, we will construct a basic flow that reads our data and reports a baseline.

At the end of this lesson, you will be able to:

- Operationalize the tasks of loading data and computing a baseline.

- Run and view tasks with Metaflow.

1Best Practice: Create a Baseline

When creating flows, we recommend starting simple: create a flow that reads your data and reports a baseline metric. This way, you can ensure you have the right foundation to incorporate your model. Furthermore, starting simple helps with debugging.

2Write a Flow

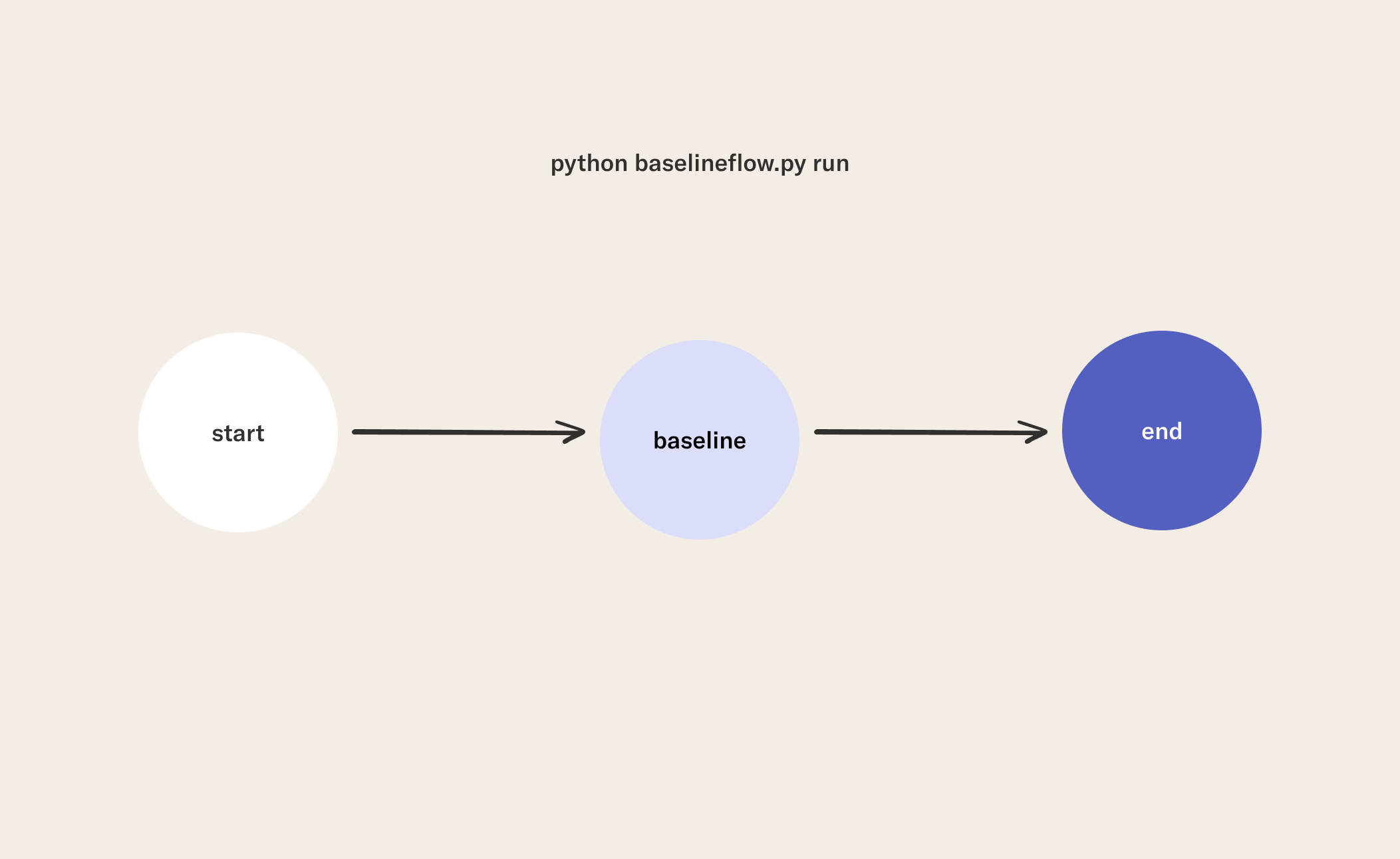

For our baseline flow, we have three steps including:

- a

startstep where we read the data, - a

baselinestep, and - an

endstep that will be a placeholder for now.

Below is a detailed explanation of each step:

- Read data from a parquet file in the

startstep.- We use pandas to read

train.parquet. - Notice how we are assigning the training data to

self.dfand the validation data toself.valdfthis stores the data as an artifact in Metaflow, which means it will be versioned and saved in the artifact store for later retrieval. Furthermore, this allows you to pass data to another step. The prerequisite for being able to do this is that the data you are trying to store must be pickleable. - We log the number of rows in the data. It is always a good idea to log information about your dataset for debugging.

- We use pandas to read

- Compute the baseline in the

baselinestep.- The

baselinestep records the performance metrics (accuracy and ROC AUC score) that result from classifying all examples with the majority class. This will be our baseline against which we evaluate our model.

- The

- Print the baseline metrics in the

endstep.- This is just a placeholder for now, but also serves to illustrate how you can retrieve artifacts from any step.

from metaflow import FlowSpec, step, Flow, current

class BaselineNLPFlow(FlowSpec):

@step

def start(self):

"Read the data"

import pandas as pd

self.df = pd.read_parquet('train.parquet')

self.valdf = pd.read_parquet('valid.parquet')

print(f'num of rows: {self.df.shape[0]}')

self.next(self.baseline)

@step

def baseline(self):

"Compute the baseline"

from sklearn.metrics import accuracy_score, roc_auc_score

baseline_predictions = [1] * self.valdf.shape[0]

self.base_acc = accuracy_score(

self.valdf.labels, baseline_predictions)

self.base_rocauc = roc_auc_score(

self.valdf.labels, baseline_predictions)

self.next(self.end)

@step

def end(self):

msg = 'Baseline Accuracy: {}\nBaseline AUC: {}'

print(msg.format(

round(self.base_acc,3), round(self.base_rocauc,3)

))

if __name__ == '__main__':

BaselineNLPFlow()

3Run the Flow

python baselineflow.py run

In the next lesson, you will learn how to incorporate your model into the flow as well as deal with branching for parallel runs.